Customer Feedback: Why It's Important & How We Collect It at Hunter

One important aspect of making your SaaS company thrive is to ensure that the product stays relevant and valuable to your customers.

You can have the best developers or the most dedicated support team; none of it will matter if your product isn’t in line with your customers’ needs.

At Hunter, we believe that the best way to create a great product is to stay in touch with our customers, collecting and considering their valuable feedback.

We always have customers who provide us with direct feedback on their own, but we also use proactive methods of collecting feedback.

In this blog post, we’ll show you how we collect customer feedback here at Hunter, as well as how we process and use it.

Collecting spontaneous feedback

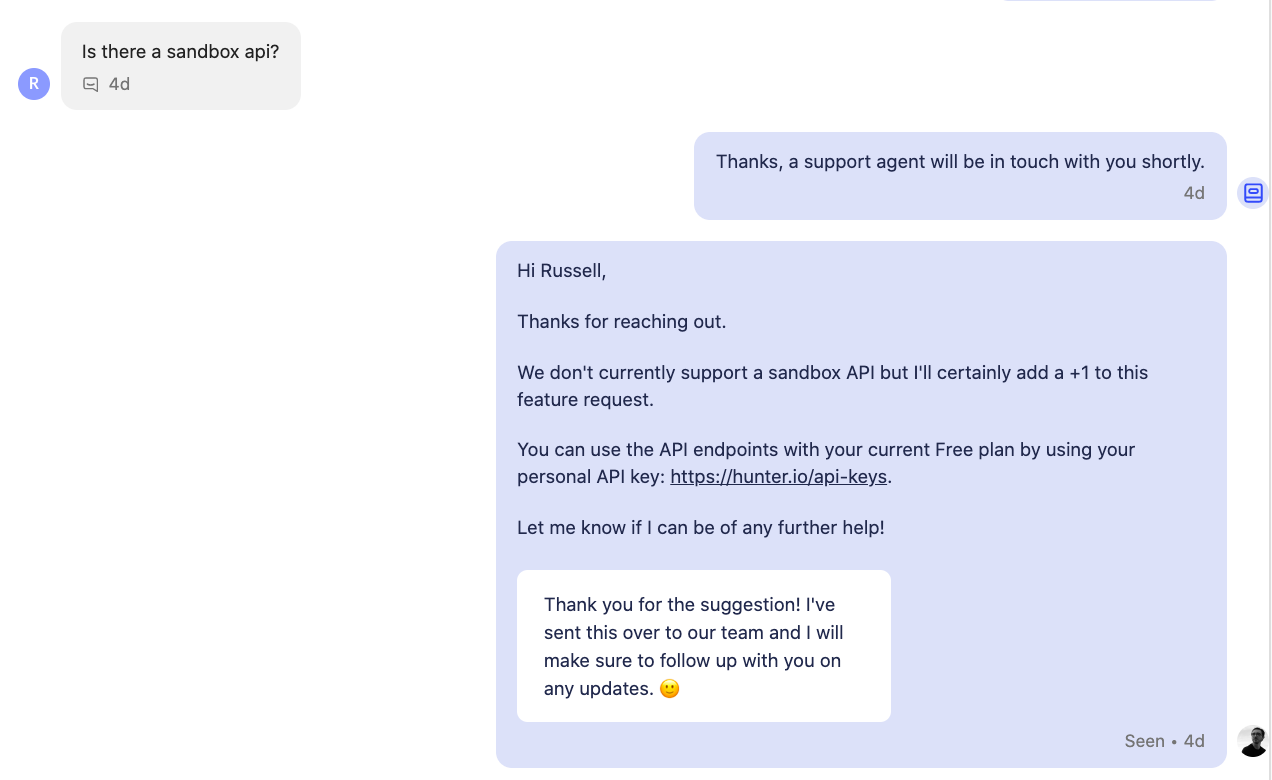

The most obvious way to collect feedback is through direct interaction while a support representative is helping a customer. At Hunter, whenever a customer asks for a specific feature when contacting us via chat or email, we mark it down in Acute, our customer feedback management tool of choice.

The rep who interacts with the customer gathers as much context and detail as possible about the customer’s use case and the reason behind the suggestion. This helps our product team assess how relevant each suggestion is.

If we decide to implement the feature, this context also helps the product team design it in a way that best fits our customers’ needs.

We thank the customer for the suggestion and let them know that this helps us decide the product’s direction. This step is crucial as we want our customers to feel heard, so they will want to come back with any further feedback they might have in the future.

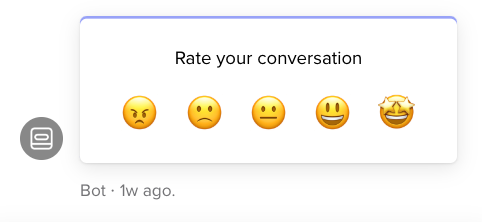

We also proactively ask for feedback from our customers at various stages of their customer journey. For example, after closing a support request, we automatically send the customer a quick survey through Intercom, which asks them to rate the help they received on a scale of 1 to 5 and to leave an optional comment.

This allows our team to react to any potential dissatisfaction and constantly improve the service we provide.

Additionally, this gives each of our support representatives a satisfaction score, calculated as the percentage of rated conversations that received a 4 or 5.
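The satisfaction score is simple arithmetic. Here is a minimal Python sketch, assuming the ratings have been exported as integers from 1 to 5, with `None` marking conversations the customer didn't rate. The function name and the `TEAM_TARGET` constant (the 95% team-level goal described in this section) are illustrative, not part of Intercom's API.

```python
from typing import Iterable, Optional

TEAM_TARGET = 95.0  # team-level goal, in percent


def satisfaction_score(ratings: Iterable[Optional[int]]) -> float:
    """Return the share of rated conversations scored 4 or 5, as a percentage.

    Conversations the customer didn't rate are passed as None and skipped,
    so they count neither for nor against the rep.
    """
    rated = [r for r in ratings if r is not None]
    if not rated:
        raise ValueError("no rated conversations to score")
    if any(not 1 <= r <= 5 for r in rated):
        raise ValueError("ratings must be between 1 and 5")
    positive = sum(1 for r in rated if r >= 4)
    return 100.0 * positive / len(rated)


# One rep's week: four rated conversations (one unrated), three of them positive.
week = [5, 4, 3, None, 5]
score = satisfaction_score(week)
print(f"{score:.1f}% (meets target: {score >= TEAM_TARGET})")  # 75.0% (meets target: False)
```

Skipping unrated conversations matters: counting them as failures would penalize reps for customers who simply ignored the survey.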

During our Monday meeting, our support team reviews the past week’s performance, including any bad ratings. We address these directly with the rep involved in the conversation and brainstorm improvements that could have led to a positive rating instead.

Our Head of Support also directly follows up on conversations with bad ratings to offer assistance: unhappy users usually have a positive reaction when a manager reaches out to apologize on behalf of a colleague.

This also allows us to restart the help process if the previous conversation wasn’t satisfactory.

On a team level, we also try to keep a high customer satisfaction score, aiming for 95% or above.

A decrease might indicate that it’s time to ask ourselves some questions about the quality and efficiency of our team. Do we have any situations we should handle differently? Do we have a big enough team to answer all requests promptly?

Feedback from embedded surveys

Another way we ask for feedback is directly through Hunter’s website while the customer is using the tool. We use SatisMeter to embed surveys directly on the website to collect feedback.

We use two main types of surveys, depending on the information we want to collect.

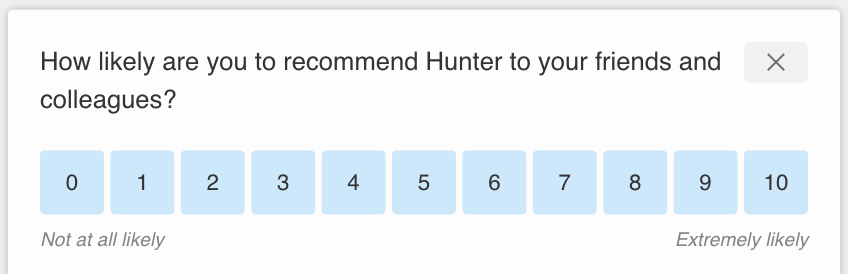

The first is the Net Promoter Score (NPS) survey, which measures how willing customers are to recommend the service to others, and therefore how likely they are to spread positive word of mouth.

The survey asks the customer to rate how likely they are to recommend Hunter to someone else on a scale of 0 to 10 and to leave an optional comment.

Whenever a customer leaves a comment with their NPS rating, this creates a conversation in Intercom so a support representative can get back to them if needed.

For instance, if the customer complains of a recurring problem, we can help them directly, and if they make a feature suggestion, we thank them and let them know that their suggestion has been taken into consideration.

At Hunter, most of the feedback we get from NPS surveys is relatively generic, such as asking for better data. In those cases, we try to get more details from the customer and ask if they can provide some specific examples that can help us improve.

These surveys also give Hunter an overall NPS, which is calculated by subtracting the percentage of detractors (ratings from 0 to 6) from the percentage of promoters (ratings of 9 or 10).

The result is a number between -100 and 100. We aim for a score of +55 or higher and review this with the entire team every quarter.
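The NPS formula can be written out as a short Python function. This is a minimal sketch, assuming the raw 0 to 10 ratings are available as a list (for example, from a survey export); it isn't SatisMeter's own calculation code. The example compares the result against the +55 goal.

```python
from typing import Sequence


def net_promoter_score(ratings: Sequence[int]) -> float:
    """NPS = % promoters (9 or 10) minus % detractors (0 to 6), from -100 to 100.

    Passives (7 or 8) count toward the total but toward neither group.
    """
    if not ratings:
        raise ValueError("no ratings")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("NPS ratings must be between 0 and 10")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)


# Ten responses: six promoters, two passives, two detractors.
responses = [10, 9, 9, 8, 7, 6, 3, 10, 9, 10]
nps = net_promoter_score(responses)
print(nps, nps >= 55)  # 40.0 False
```

Keeping passives in the denominator is what makes NPS differ from a plain "promoters minus detractors" count: a batch of passive responses pulls the score toward zero.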

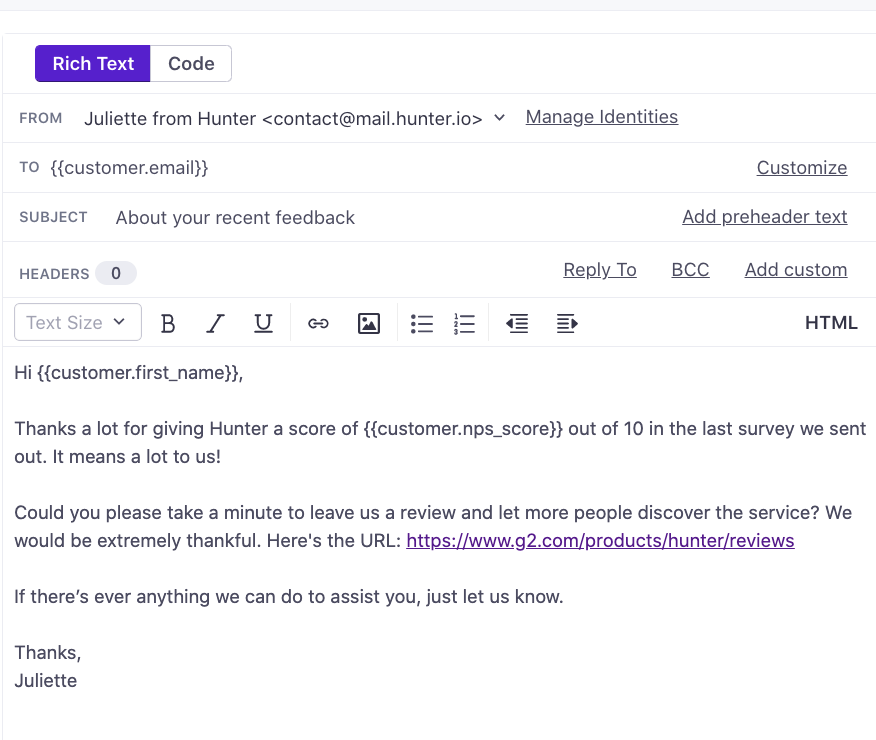

We like to follow up via email with the users that leave NPS ratings to get even more feedback.

Whenever we need to create more complex and automated email campaigns to follow up with our users, we use Customer.io.

It integrates all sorts of attributes and events with our list of users, which allows us to automatically follow up after a user completes an NPS survey, cancels a subscription, or leaves a conversation rating.

For instance, if a user gives an NPS rating without leaving a comment, we follow up via email to ask for more information. If they were a detractor (a score between 0 and 4), we ask if they experienced a particular issue or if an important feature was missing.

If they left a passive score (5 to 8), we ask what one thing they would change to improve Hunter. Lastly, if they were a promoter (a score of 9 or 10), we thank them for their continued support and suggest they leave a review on websites like G2 or Capterra.
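These follow-ups are configured as Customer.io campaigns rather than written as code, but the routing rule is easy to express as a function. In this minimal sketch, the score bands are the ones used for the follow-up emails (0 to 4, 5 to 8, 9 or 10), and the `FollowUp` template names are hypothetical placeholders, not Customer.io identifiers.

```python
from enum import Enum
from typing import Optional


class FollowUp(Enum):
    """Hypothetical email templates, one per score band."""
    ASK_ABOUT_ISSUE = "detractor"       # issue or missing feature?
    ASK_ONE_CHANGE = "passive"          # one thing you'd change?
    THANK_AND_ASK_REVIEW = "promoter"   # thanks, plus a review link


def pick_nps_follow_up(score: int, comment: Optional[str]) -> Optional[FollowUp]:
    """Choose the follow-up email for an NPS response, or None for no email."""
    if not 0 <= score <= 10:
        raise ValueError("NPS ratings must be between 0 and 10")
    if comment and comment.strip():
        # Responses with a comment already open an Intercom conversation,
        # so a support rep replies there instead.
        return None
    if score <= 4:
        return FollowUp.ASK_ABOUT_ISSUE
    if score <= 8:
        return FollowUp.ASK_ONE_CHANGE
    return FollowUp.THANK_AND_ASK_REVIEW


print(pick_nps_follow_up(3, None))       # FollowUp.ASK_ABOUT_ISSUE
print(pick_nps_follow_up(9, "Love it"))  # None
```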

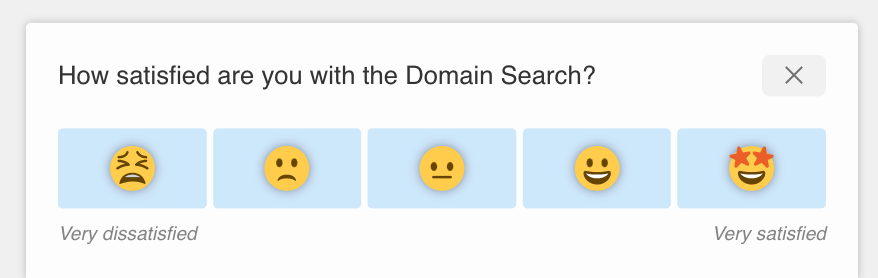

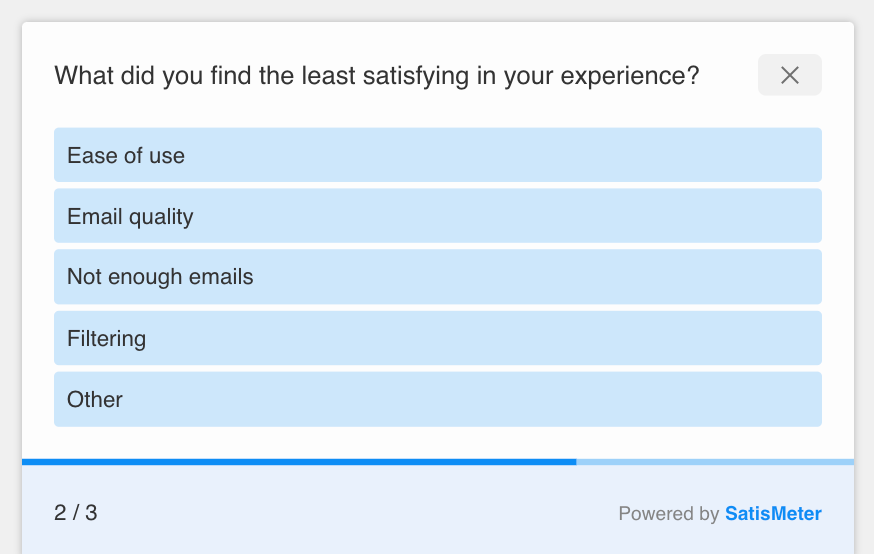

The second type of survey we use is the Customer Satisfaction Score survey, or CSAT, which helps us measure how satisfied users are with a specific tool or feature.

The CSAT survey asks users a single question, “How satisfied are you with [tool/feature]?”, with answers ranging from 1 to 5.

We can also add more specific questions depending on the previous answer. For instance, if the user gave a score of 1 to 4, we ask what their biggest issue with the tool or feature was.

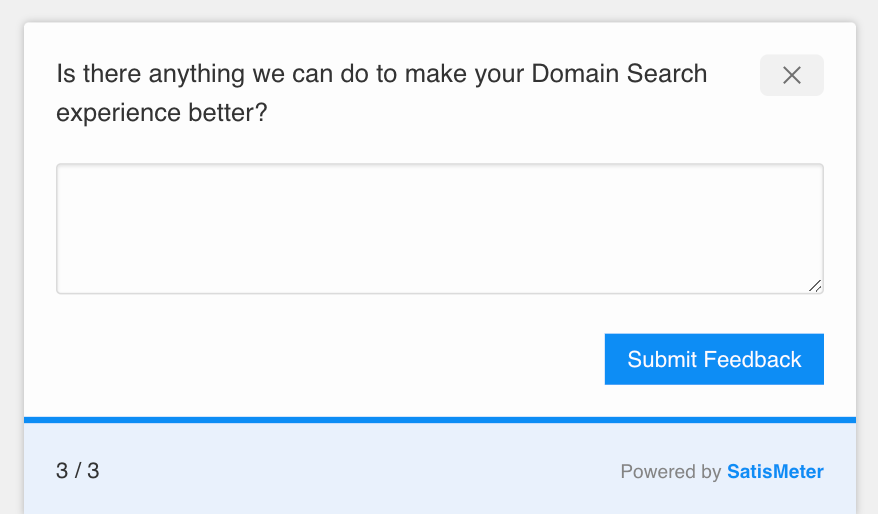

We also like to leave some space for additional feedback by finishing with an open-ended question.
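SatisMeter handles this branching through its survey settings. Purely to show the logic, here is a minimal Python sketch; the question wording is hypothetical, and the function only returns the follow-up questions shown after the 1 to 5 rating.

```python
from typing import List


def csat_follow_up_questions(score: int, feature: str) -> List[str]:
    """Return the questions shown after the CSAT rating for `feature`."""
    if not 1 <= score <= 5:
        raise ValueError("CSAT ratings must be between 1 and 5")
    questions = []
    if score <= 4:
        # Any score from 1 to 4 asks for the main pain point.
        questions.append(f"What was your biggest issue with {feature}?")
    # Every respondent ends with an open-ended question.
    questions.append("Is there anything else you'd like to share?")
    return questions


print(csat_follow_up_questions(3, "Domain Search"))
```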

Just like with the NPS surveys, answers with a comment are automatically sent to Intercom as new conversations.

We recently added CSAT surveys to better understand how our users react to our tools and bring targeted improvements.

They also make it easier to keep track of issues linked to a specific feature so that we can resolve them immediately. When we change something in the product, we can also check if there’s a change in the customer satisfaction score.

If the score improves, we know we did something right, and if it gets worse, we might revert the changes or try something different instead.

SatisMeter, the tool we use to add these surveys to our website, also offers various configuration options that make it easy for us to adapt the surveys to new goals.

For instance, we recently reduced the delay between surveys from twelve to four months to track the satisfaction of our users at least three times per year and keep track of possible pain points and improvements.
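SatisMeter enforces this interval itself; the sketch below only illustrates the rule. It assumes we know the date each user was last surveyed and uses a four-month interval, which works out to roughly three surveys per user per year (12 / 4 = 3). The helper names are hypothetical.

```python
import calendar
from datetime import date
from typing import Optional

SURVEY_INTERVAL_MONTHS = 4  # previously 12


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping to the last day of short months."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def is_due_for_survey(last_surveyed: Optional[date], today: date) -> bool:
    """True if the user has never been surveyed or the interval has elapsed."""
    if last_surveyed is None:
        return True
    return today >= add_months(last_surveyed, SURVEY_INTERVAL_MONTHS)


print(is_due_for_survey(date(2024, 1, 15), date(2024, 5, 14)))  # False
print(is_due_for_survey(date(2024, 1, 15), date(2024, 5, 15)))  # True
```

Counting in calendar months rather than a fixed number of days keeps the schedule aligned with the "every four months" wording; the clamping handles dates like October 31, which has no counterpart in February.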

This increased the amount of feedback we get, generated more interaction and reviews, and provided us with a more detailed view of how our users’ satisfaction improves over time.

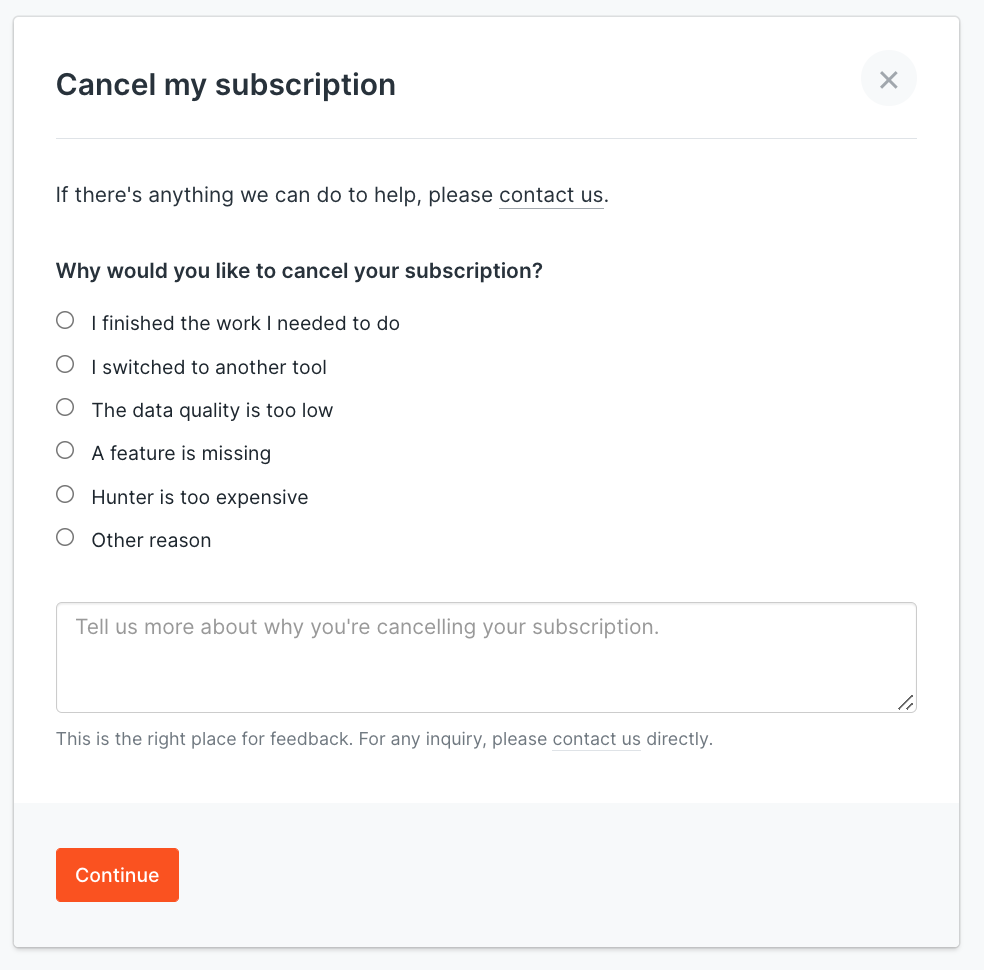

Cancelation feedback

Another time we ask for feedback from our customers is when they cancel a premium subscription. The customer could be canceling due to dissatisfaction with the service provided.

Gathering insight at that moment is important because it gives us a chance to make things right with the customer. As soon as the customer selects the “Cancel” option, we ask for the main reason, offering a few common reasons to choose from and a space for a more detailed explanation.

A couple of hours after the customer has completed the cancelation procedure, we follow up with them via email to check if they can provide more information based on the specific cancelation reason they gave, and to put them in direct contact with a support rep.

For instance, if they mention that they weren’t satisfied with the amount or quality of data, we ask them if we could take a look at the data they acquired to check if anything could be improved.

Online reviews

What do you do before you make an online purchase? Most people look for reviews to see what past and present customers say about a product.

As our team constantly works on product development and releases new features and improvements frequently, we aim to collect continuous feedback.

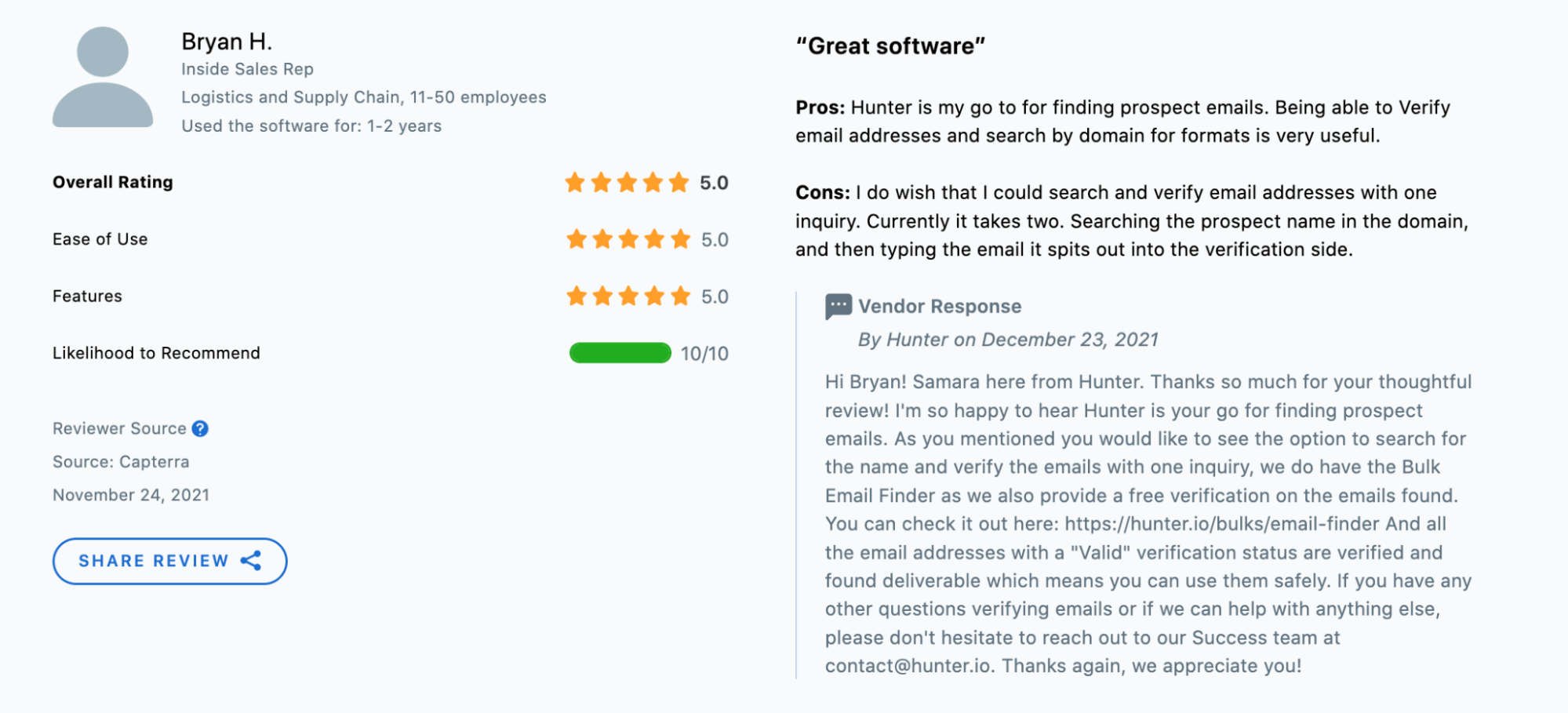

Some customers leave reviews online on their own, but it’s also a good idea to ask customers to leave a review on a review platform.

For example, the day after a promoter (a rating of 9 or 10) submits an NPS rating without written feedback, we send them an email asking them to leave a review on one of many review platforms, such as Capterra, Trustpilot, or G2Crowd.

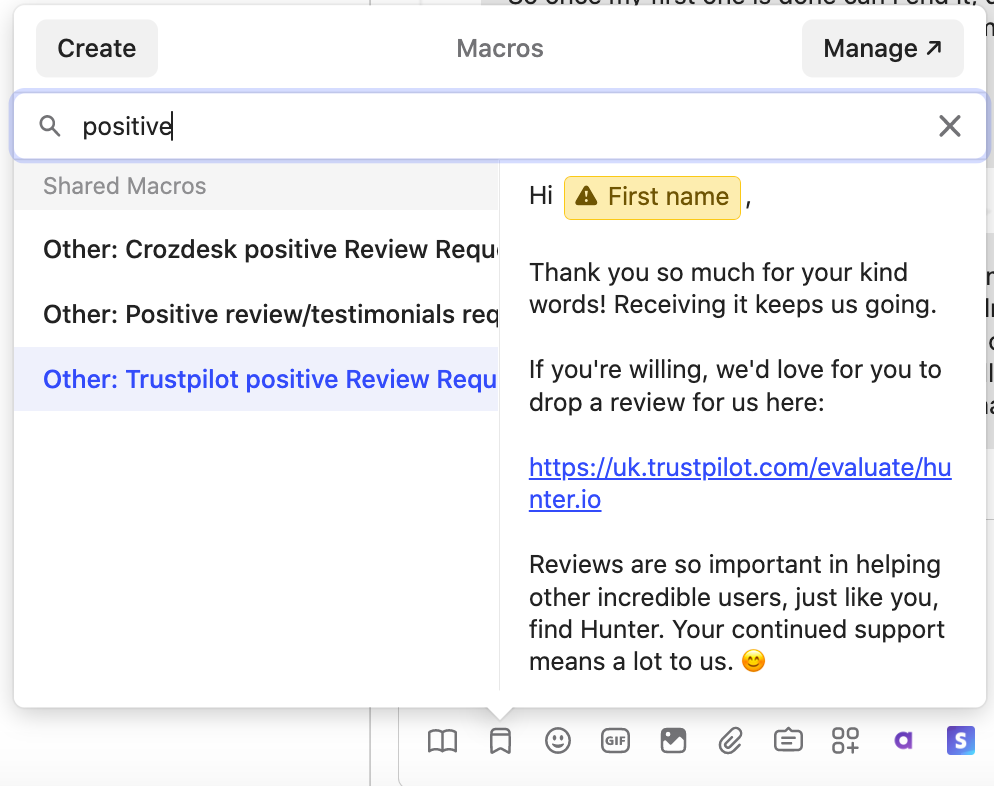

We also send a similar email one hour after a user rates a support conversation with a 5 out of 5 for the first time.

We monitor reviews weekly and directly answer the feedback we find there.

Having dedicated pages on these platforms also brings new insights, such as feature ideas and user pain points. If reviews suggest feature ideas we haven’t logged yet, we add them to Acute.

We also use an Intercom saved reply to ask for a review when we think a customer could leave a positive one after a good support interaction.

We monitor review scores every quarter to make sure our average rating stays at 4.5 or above.

Extensive surveys and interviews

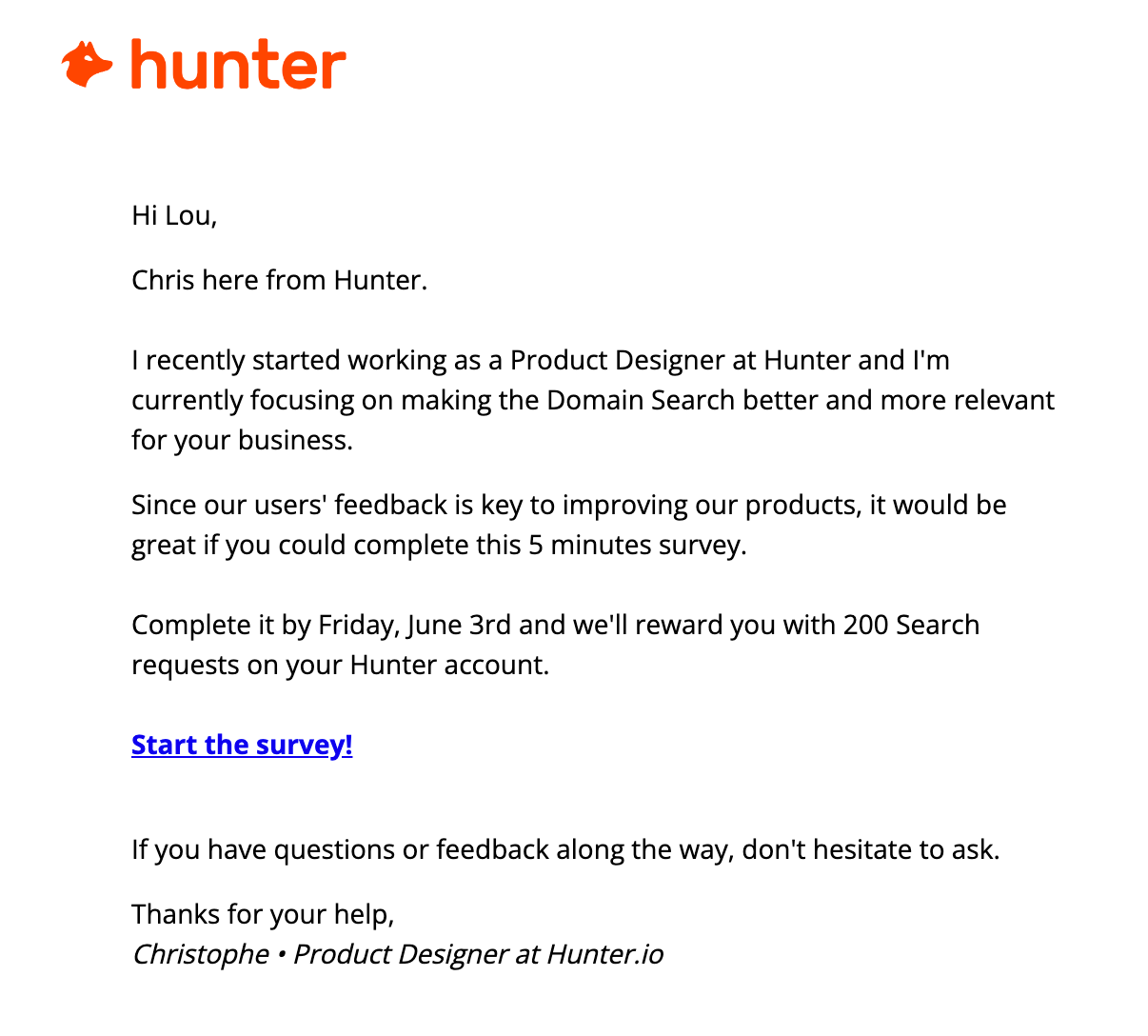

Lastly, we sometimes ask for more in-depth feedback through customer interviews or longer surveys. These interviews and surveys usually have a targeted purpose in mind, such as improving a specific part of the product or learning about our customers and their needs and goals.

Since we’re asking for much more information and time, we offer free perks or even small amounts of money in return for a completed survey or interview.

For example, we recently sent a more in-depth survey regarding Domain Search. The goal of the survey was to help us better understand how our customers currently experience the tool, what they like about it, and what they would change.

We asked multiple questions about how they currently use Domain Search to fully understand their experience with the tool.

Christophe, our Product Designer, analyzed all the answers as a starting point for a design enhancement project. This allowed him to know which aspects of the tool he should redesign or work on first. We even gleaned additional insight that will be used in future projects.

Collecting customer feedback in Intercom using Acute

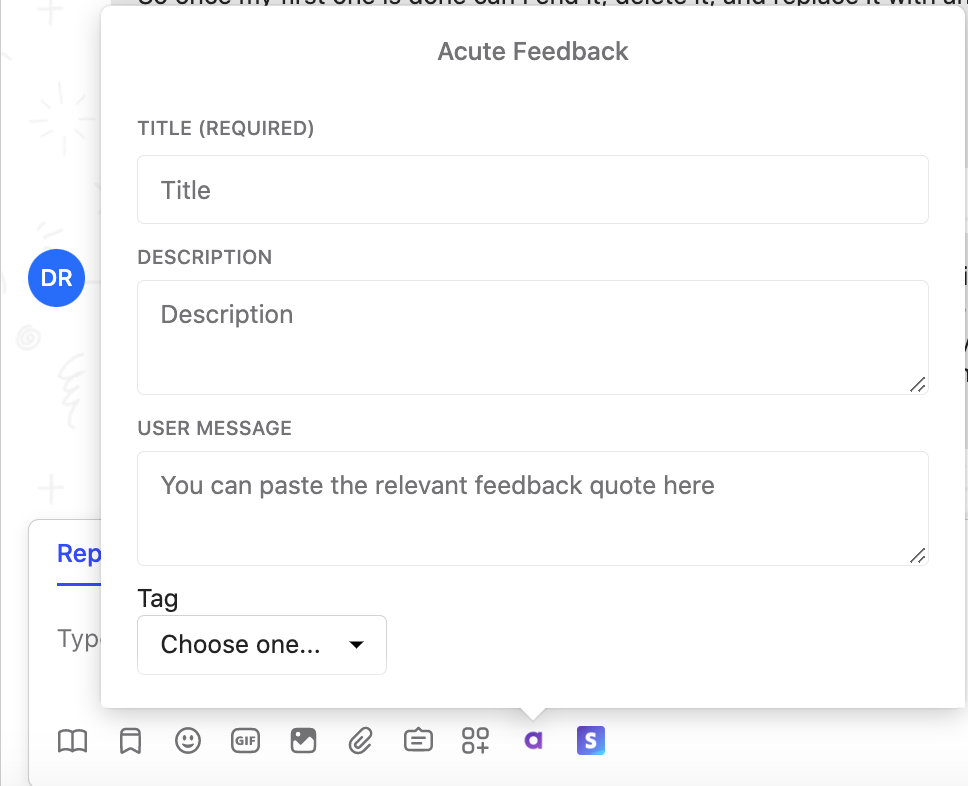

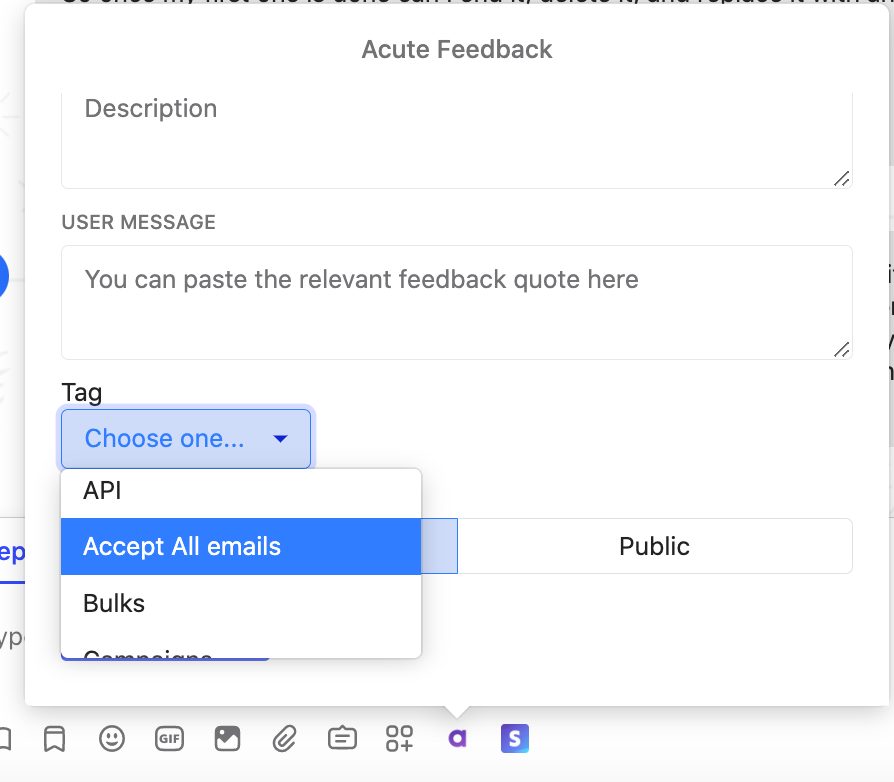

We use Acute to track all our user feature suggestions. It seamlessly integrates with Intercom and allows us to track feedback internally or publicly in a few clicks.

This means any team member can add a suggestion directly from an Intercom conversation, so we can keep track of the customers who made it. Team members can also add a vote to a feature that has been suggested before.

When a customer reaches out to ask about a feature (whether to see if we offer it or to suggest it directly), we try to gather as much information as possible about their use case to make sure we understand the need. This helps the product team understand the intention behind each request.

The person who will read these suggestions and decide what to do with them may not be the same person who adds the information to Acute.

Therefore, it is important to add a detailed description to each feature request and to add the customer use cases if possible.

This will help prevent any misunderstandings down the line.

We add relevant tags based on product area to make feature requests easier to search and categorize. For example, Hunter’s tags include Bulks, Campaigns, API, and so on.

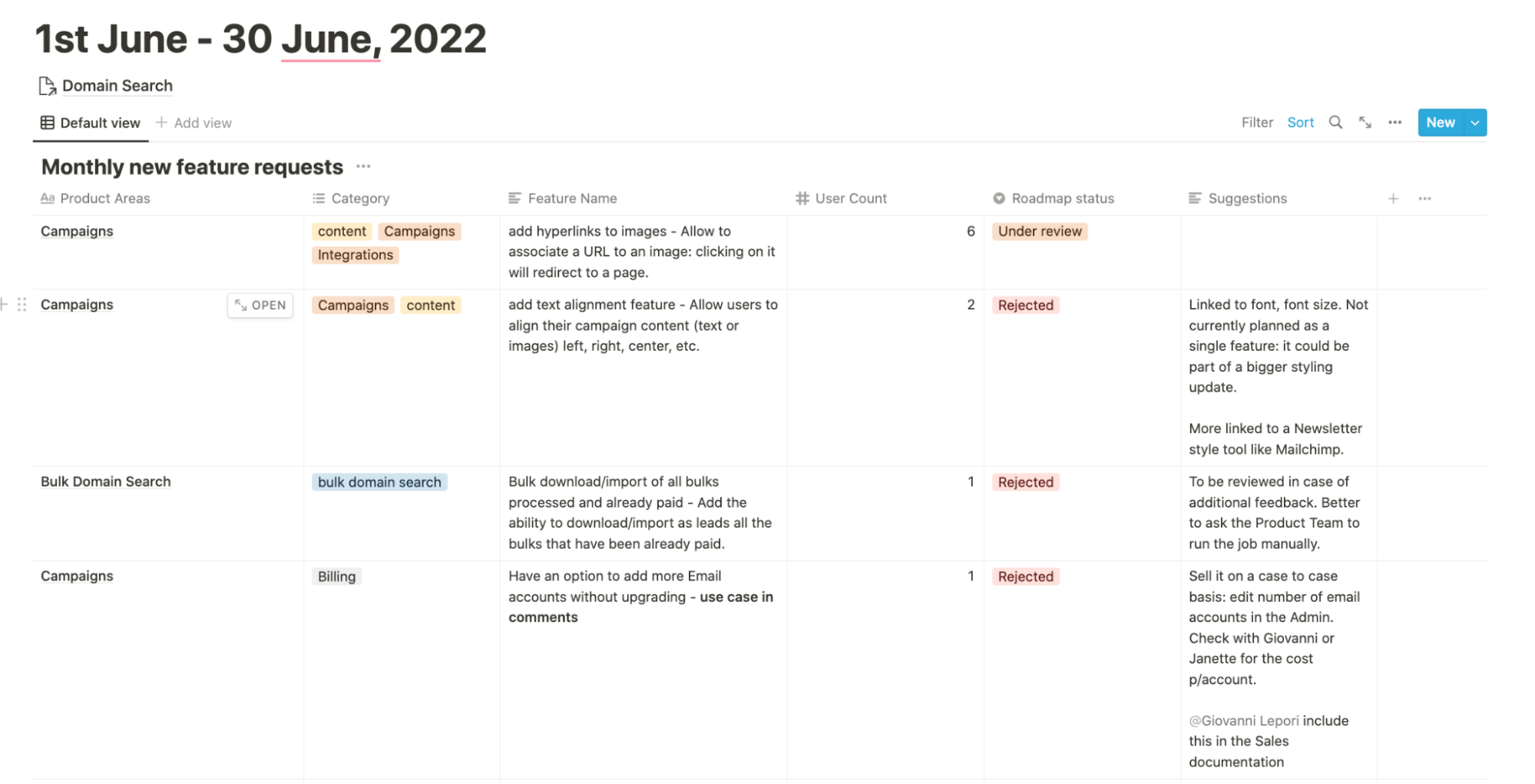

Lastly, we generate a monthly roundup report in Notion with the most requested features and new suggestions. The product team reviews each feature request and evaluates it based on the company’s current goals, vision, and resources.

We give each feature request a status, which can be “Planned” if it is in the short-term roadmap, “Future” if we like the suggestion but can’t or don’t want to implement it just yet, and “Rejected” if this is not a feature we want to implement in our app.

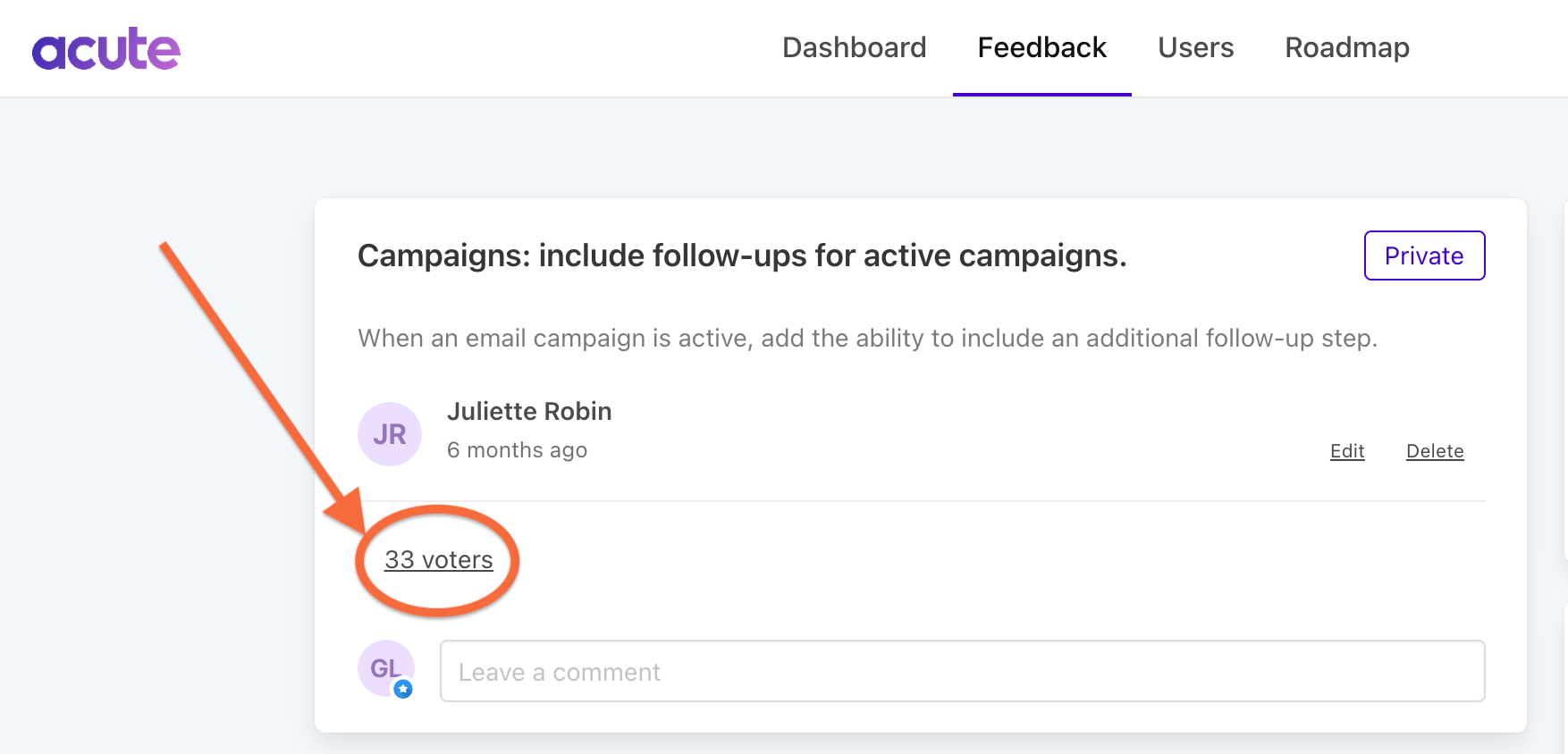

Occasionally, we look at the features that have the most votes to see if this is something we want to implement soon.

When we implement a feature, we directly contact the users who voted for it to let them know it’s available.
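Acute manages votes and statuses for us, but the underlying bookkeeping is simple. The following Python sketch is a hypothetical model, not Acute's data model or API: each request keeps a set of voter emails (so a repeat vote from the same customer counts once), `most_requested` ranks open requests by votes for the monthly roundup, and `mark_complete` returns the voters to contact when the feature ships. The statuses mirror the ones described in this section; the example titles and emails are made up.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional, Set


class Status(Enum):
    PLANNED = "Planned"    # on the short-term roadmap
    FUTURE = "Future"      # liked, but not yet
    REJECTED = "Rejected"  # not something we'll build
    COMPLETE = "Complete"  # shipped


@dataclass
class FeatureRequest:
    title: str
    tag: str                                  # product area, e.g. "Bulks" or "API"
    voters: Set[str] = field(default_factory=set)
    status: Optional[Status] = None           # None until the product team reviews it

    def add_vote(self, customer_email: str) -> None:
        self.voters.add(customer_email)


def most_requested(requests: List[FeatureRequest], limit: int = 10) -> List[FeatureRequest]:
    """Open requests (not rejected or complete), most votes first."""
    closed = (Status.REJECTED, Status.COMPLETE)
    open_requests = [r for r in requests if r.status not in closed]
    return sorted(open_requests, key=lambda r: len(r.voters), reverse=True)[:limit]


def mark_complete(request: FeatureRequest) -> List[str]:
    """Mark a request as shipped and return its voters, sorted, for the announcement."""
    request.status = Status.COMPLETE
    return sorted(request.voters)


export = FeatureRequest("CSV export for bulk tasks", "Bulks")
for email in ["ana@example.com", "li@example.com", "ana@example.com"]:
    export.add_vote(email)  # the duplicate vote is ignored
webhooks = FeatureRequest("Webhooks", "API")
webhooks.add_vote("sam@example.com")

print([r.title for r in most_requested([webhooks, export])])  # ['CSV export for bulk tasks', 'Webhooks']
print(mark_complete(export))  # ['ana@example.com', 'li@example.com']
```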

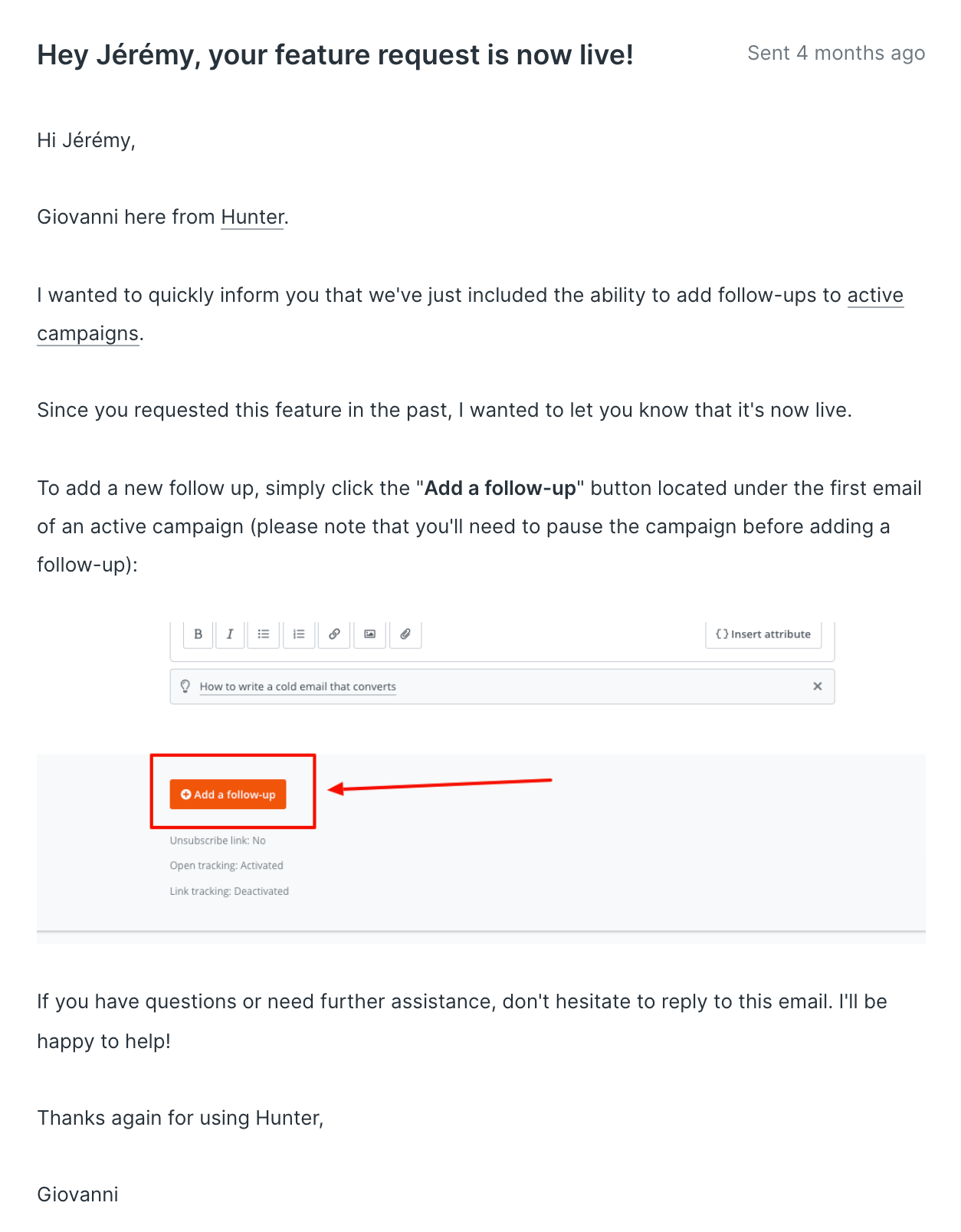

Communicating when a feature request goes live

Whenever a new feature is released by our Product Team, we directly inform users who have requested it so that we can allow them to have a better Hunter experience immediately.

To do this, we simply export the voters from Acute and update the status of the suggested feature to “Complete.”

Hunter Campaigns is our preferred tool to create an email campaign simply and quickly. By using dynamic attributes such as "first name" in the content, we can also ensure that each email sent will be highly personalized.

Over the years, we’ve noticed that using a subject line like “Hey {First Name}, your requested feature is now live!” leads to high open rates. We also tend to go straight to the point and include a screenshot or link to a help guide to provide a visual reference for the new functionality.

After sending the email campaign, all we have to do is support users who have questions or need clarification.

This takes little time but goes a long way toward building better relationships with users: in many cases, they are surprised to receive an email, as most of the tools they use don’t take the time to do this.

As a new feature may also be useful to users who haven’t directly requested it, our marketing team also posts regular product updates on our blog and sends out product update newsletters.

Collecting customer feedback is crucial for improving your product

We hope all these examples will inspire you to ask for more feedback from your users.

In the end, they’re the ones using your product daily, and although they may not know the ins and outs of your company’s goals, getting their input is crucial in maintaining a great service and product.

Send cold emails with Hunter
