A few weeks ago, we migrated most of our service to GCP. We learned a lot during this change to the infrastructure. Overall we’re very happy with the change but there’s, of course, some nuance. Here are some of our main findings.

The load balancer brings significant speed improvements

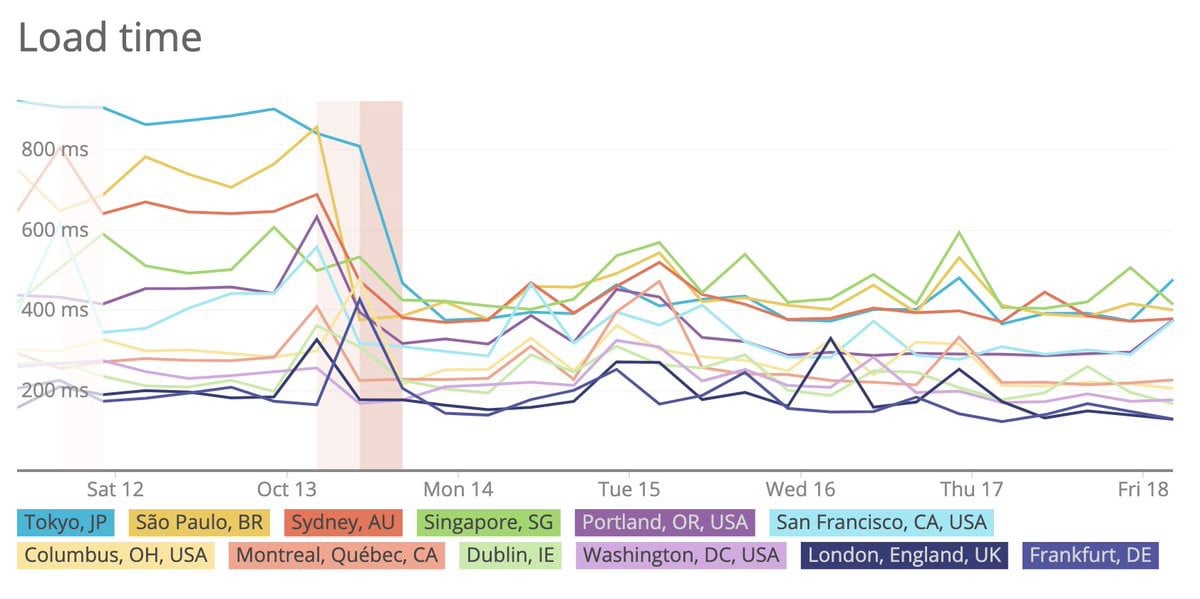

After the migration, we were surprised to notice a substantial improvement in response times for users in the US and Asia (far away from our servers).

The explanation is most likely that Cloudflare establishes a TLS connection to GCP near the user. The rest of the route to our servers doesn’t require opening up a new encrypted connection with multiple round trips.
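As a back-of-the-envelope illustration of that explanation, the sketch below counts the round trips needed before the first response byte arrives. It is a rough model, not a measurement: the round-trip times are made-up numbers, and it assumes one round trip for the TCP handshake, one for a full TLS 1.3 handshake, and one for the request itself, with the edge reusing an already-open connection to our servers.

```python
# Rough model: time to first byte with and without TLS termination near the user.
# All round-trip times (RTTs) passed in below are hypothetical.

TCP_HANDSHAKE_RTTS = 1    # SYN / SYN-ACK before any data can flow
TLS13_HANDSHAKE_RTTS = 1  # full TLS 1.3 handshake (TLS 1.2 needs 2)


def ttfb_direct(rtt_user_to_origin_ms: float) -> float:
    """Every handshake round trip travels the full distance to the origin."""
    setup = (TCP_HANDSHAKE_RTTS + TLS13_HANDSHAKE_RTTS) * rtt_user_to_origin_ms
    request = rtt_user_to_origin_ms
    return setup + request


def ttfb_edge_terminated(rtt_user_to_edge_ms: float,
                         rtt_edge_to_origin_ms: float) -> float:
    """Handshakes stop at a nearby edge; only the request crosses the long haul.

    Assumes the edge already holds a warm connection to the origin.
    """
    setup = (TCP_HANDSHAKE_RTTS + TLS13_HANDSHAKE_RTTS) * rtt_user_to_edge_ms
    request = rtt_user_to_edge_ms + rtt_edge_to_origin_ms
    return setup + request


if __name__ == "__main__":
    # Hypothetical user 200 ms away from the servers, 10 ms away from an edge.
    print(ttfb_direct(200))               # 600
    print(ttfb_edge_terminated(10, 190))  # 220
```

With these invented numbers, the handshake cost drops from 400 ms to 20 ms, which is the kind of gap that would show up most for distant users.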

This improvement is significant. Considering that the price of the load balancer is very reasonable, it was a welcome surprise.

Beware of high costs from small features

With GCP, AWS, and others, you pay for every single operation, even if it’s tiny. Overall, I think that’s a good thing. When you don’t pay for something, can you really expect a high level of performance? (We’ve been burned by this in the past.)

The downside is unexpected costs. You need to look at the pricing in detail to avoid surprises later on.

For example, we added IP filtering to our load balancer to ensure only Cloudflare can connect. This minor change actually costs $0.75 per million requests, which adds up quickly if you have a heavily used API.
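To get a feel for how a per-request fee scales, here is a minimal sketch that turns the $0.75 per million requests figure into a monthly amount. The request rate in the example is hypothetical, and the function assumes steady traffic over a 30-day month.

```python
# Estimate the monthly cost of a per-request fee.
# The price comes from the text above; the traffic figure is hypothetical.

PRICE_PER_MILLION_REQUESTS_USD = 0.75


def monthly_request_fee(requests_per_second: float, days: int = 30) -> float:
    """Return the monthly fee in USD for a steady request rate."""
    requests_per_month = requests_per_second * 60 * 60 * 24 * days
    return requests_per_month / 1_000_000 * PRICE_PER_MILLION_REQUESTS_USD


if __name__ == "__main__":
    # 1,000 requests/second for 30 days is about 2.6 billion requests.
    print(f"${monthly_request_fee(1_000):,.2f} per month")  # $1,944.00 per month
```

Plugging in your own peak and average request rates before enabling a feature like this is a quick way to avoid a surprise on the invoice.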

In practice, we noticed an increase in our hosting fees of around 30 to 40% since the migration compared to OVH. Considering all the benefits of GCP, we feel at the moment it’s a good investment.

Multi-zone isn’t necessary in most cases

Initially, our deployment was going to be spread out across two zones to improve redundancy. But considering that bandwidth between zones isn’t free ($0.01 per GB feels expensive for an internal network!), and that we have a very high volume of internal network traffic, we decided to move to a system with only one zone.
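The trade-off can be sketched with some quick arithmetic. The example below uses the $0.01 rate quoted above; the daily traffic volume and the share of traffic that crosses zones are assumptions for illustration only (with two evenly loaded zones, roughly half of internal traffic could end up crossing between them).

```python
# Estimate what inter-zone traffic would cost per month.
# Traffic volume and cross-zone share are hypothetical inputs.

PRICE_PER_GB_USD = 0.01  # inter-zone rate quoted in the text


def monthly_cross_zone_cost(internal_gb_per_day: float,
                            cross_zone_fraction: float = 0.5,
                            days: int = 30) -> float:
    """Return the monthly USD cost of the internal traffic that crosses zones."""
    if not 0.0 <= cross_zone_fraction <= 1.0:
        raise ValueError("cross_zone_fraction must be between 0 and 1")
    billable_gb = internal_gb_per_day * cross_zone_fraction * days
    return billable_gb * PRICE_PER_GB_USD


if __name__ == "__main__":
    # Hypothetical: 20 TB of internal traffic per day, half of it crossing zones.
    print(f"${monthly_cross_zone_cost(20_000):,.2f} per month")  # $3,000.00 per month
```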

In practice, we don’t expect any significant downside from this reduced redundancy. It would take a full data center outage for the application to go offline. And even then, our backups are available in other zones, which keeps recovery manageable.

GKE is easy to manage and great at cutting costs

Kubernetes is a Google project. GKE is, therefore, unsurprisingly well implemented. We’ve never had a problem with it. In particular, the custom dashboard, logging, secrets encryption, and easy automated upgrades are great benefits.

But the most significant advantage has been cost optimization. GKE allows creating node pools with low-cost preemptible instances. If you have a workload that can scale efficiently based on the number of available instances, GKE runs it on those low-cost instances with minimal configuration.

We’ve also noticed that the cluster is very efficient at moving pods to a smaller number of nodes to save costs. Again, the beautiful thing is that you don’t have to configure anything: by default, your cluster will try to minimize your bill!
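To illustrate the general idea of packing pods onto fewer nodes, here is a toy first-fit-decreasing sketch. It is not how GKE's scheduler or autoscaler actually works (those weigh many more factors); the function name, the CPU figures, and the single-resource model are all assumptions made for the example.

```python
# Toy bin packing: place pods on as few equal-sized nodes as possible.
# Illustration only; real cluster scheduling considers far more than CPU.


def pack_pods(pod_cpu_requests: list[float],
              node_cpu_capacity: float) -> list[list[float]]:
    """Assign pods to nodes using first-fit decreasing on CPU requests."""
    nodes: list[list[float]] = []
    # Placing the largest pods first tends to leave fewer awkward gaps.
    for request in sorted(pod_cpu_requests, reverse=True):
        if request > node_cpu_capacity:
            raise ValueError("pod does not fit on any node")
        for node in nodes:
            if sum(node) + request <= node_cpu_capacity:
                node.append(request)
                break
        else:
            # No existing node has room, so another node is needed.
            nodes.append([request])
    return nodes


if __name__ == "__main__":
    # Hypothetical CPU requests (in cores) on 4-core nodes.
    placement = pack_pods([2, 1, 1, 0.5, 0.5, 3], node_cpu_capacity=4)
    print(len(placement))  # 2
    print(placement)       # [[3, 1], [2, 1, 0.5, 0.5]]
```

Six pods that might otherwise sit on three or four partly idle nodes fit on two full ones here, which is where the savings come from.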

Private networking is great for security and to limit costs

We’ve had problems in the past with private networks: hard to configure, not enough bandwidth, not exactly private, etc.

From our testing, GCP private networks are very low latency, high throughput, and super easy to use. This is most likely something we won’t have to think about any longer.

Service accounts are both easy to use and great for security

We partly used AWS a while back and had bad memories of the complexity related to creating credentials and managing permissions for an application.

Thankfully, GCP has what it calls Service Accounts. They’re well implemented and make it remarkably easy to ensure resources are only available to the right application.

The managed PostgreSQL is too locked-in

We intended to use the Cloud SQL offering for our databases. But with PostgreSQL, there’s no way to migrate an existing database without substantial downtime. Migrating away from Cloud SQL would also be difficult, especially with a large database.

To avoid being locked in, we decided to keep managing PostgreSQL ourselves for the time being.